Project title:

Shaping the Imagination: Real-time visual dialogue with AI

Objective:

Explore a method of real-time co-creation between humans and artificial intelligence, using simple gestures filmed from above. Test how generated images can enrich creative or playful processes.

Working hypothesis:

Everything captured by the camera can become interpretable material for the AI. By adjusting transformation settings and carefully crafting prompts, it is possible to guide the AI into a cooperative relationship where it amplifies or reinterprets human gestures with nuance.

Analytical focus:

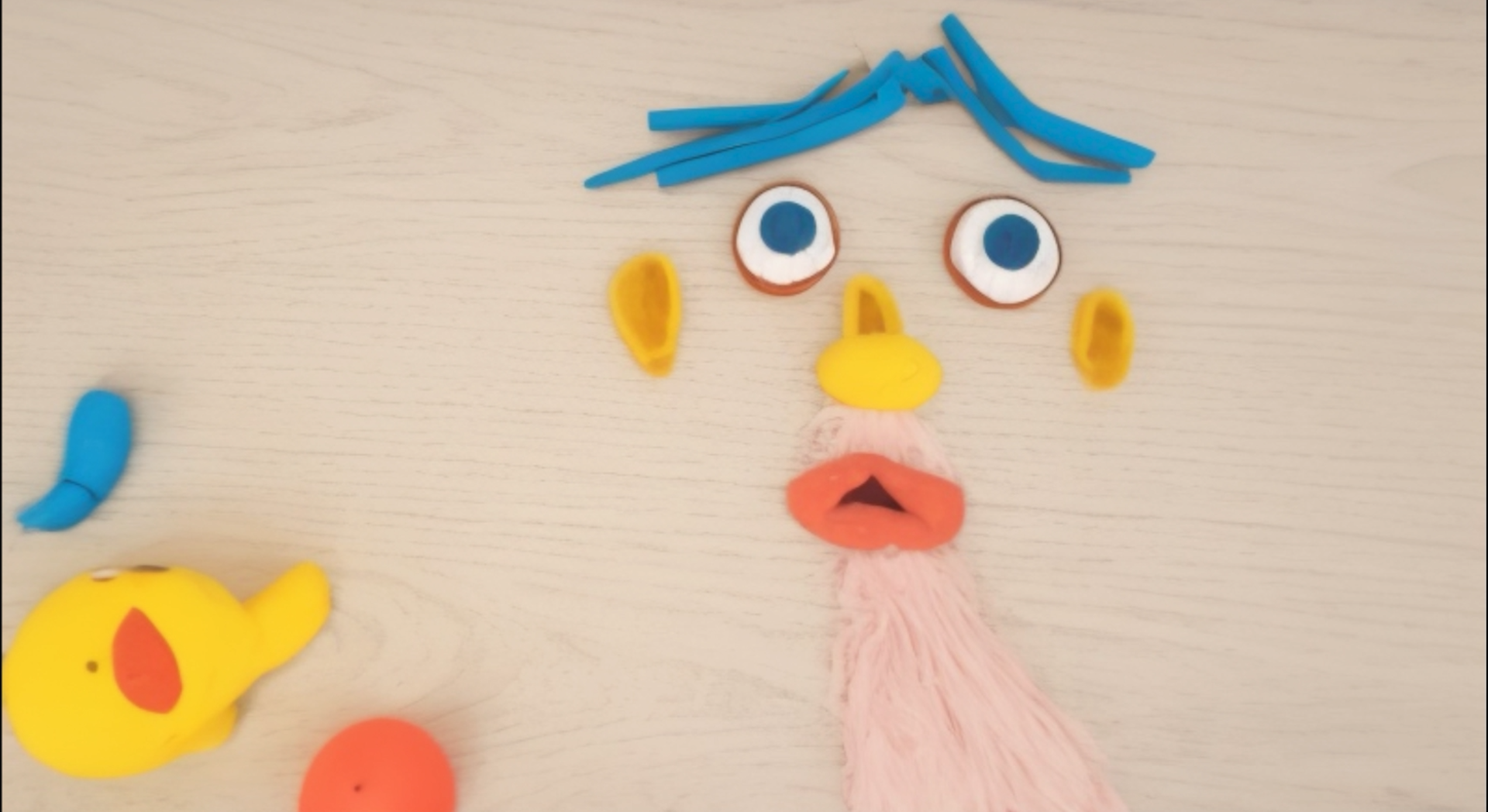

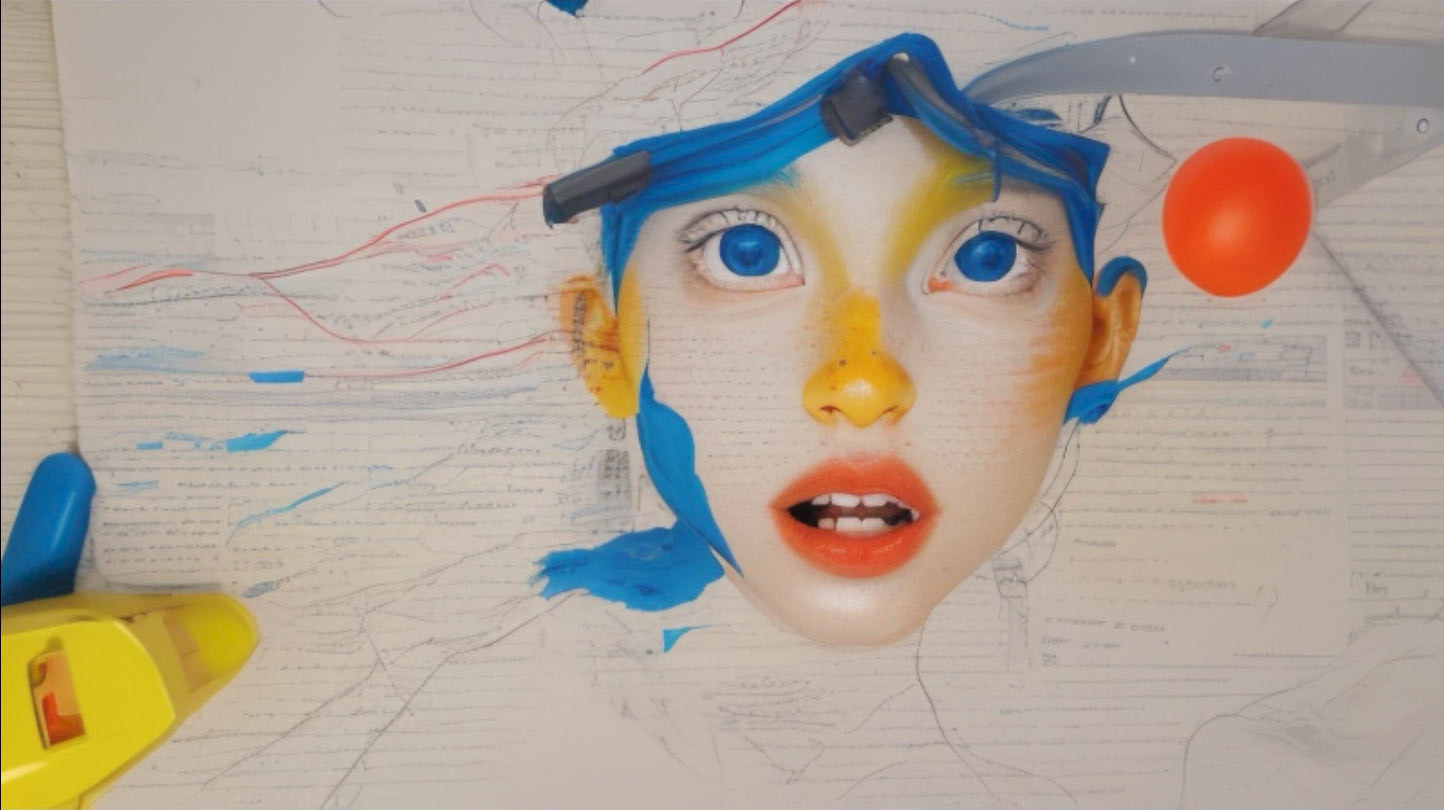

Study the effects of physical forms (clay, hands, objects) interacting with AI-generated visuals. Observe visual interference, interpretative shifts, and how these “accidents” can become creative drivers. Also analyze the potential for use in brainstorming, education, or rapid visual prototyping.

Expected outcome:

Produce a video demonstration documenting the protocol, settings, and results. Highlight the potential of this approach as a co-imagination tool, particularly for design, narrative creation, or conceptual animation. Establish the basis for an accessible method blending physical manipulation and live image generation (clay modeling, sketching, object manipulation).

Project title (translated from French):

Shaping the Imagination with Two Hands: Real-time visual dialogue with AI

Objective:

Explore a method of real-time co-creation between humans and artificial intelligence, based on simple gestural interactions filmed from above on a flat surface (plasticine modeling, sketches, objects). Test how generated images can enrich the creative or playful process.

Working hypothesis:

Everything the camera captures can become material the AI can interpret. By adjusting the transformation settings and controlling the prompts, it is possible to guide the AI into a cooperative relationship in which it amplifies or reinterprets our gestures with sensitivity.

Analytical focus:

Study the effects of the coexistence of physical forms (plasticine, hands, objects) and the visual interpretations generated by the AI. Observe visual interference, shifts in interpretation, and the way these accidents can become creative drivers. Also analyze possible uses in brainstorming, education, or rapid visual prototyping.

Expected outcome:

Lay the foundations for an accessible method that hybridizes physical manipulation and live image generation (plasticine, sketches, objects).

The AI becomes a creative partner, a playful mirror reacting to my gestures with unexpected suggestions.

Everything the camera sees becomes material for interpretation.

In this process, nothing escapes the camera’s eye. Every element it captures (tools, textures, scraps, shadows, nearby objects) becomes interpretable. The AI doesn’t distinguish between what’s intentional and what’s not. It transforms, reinvents, and reimagines. When my hands appear while manipulating the clay, they can interfere with the AI’s understanding of the shape. Sometimes their outlines blend with the object, producing strange or unexpected results. A finger becomes a beak; a palm turns into a face. These visual “accidents” are not mistakes; they open up new creative paths, where ambiguity becomes a playground.

Each frame is interpreted in near real time, at about one image per second on my single RTX 4070 Ti. The video is sped up to 500%.
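As a rough check on what that pacing means for the viewer, the arithmetic can be sketched in Python. This assumes the 500% figure means 5x playback, and the 60-second clip length is a hypothetical value chosen only for illustration. `playback_stats` is an illustrative helper, not part of any tool used in the project.

```python
def playback_stats(images_per_second: float, speedup: float, clip_seconds: float):
    """Return (generated images shown per second of video,
    seconds of real session covered by the clip)."""
    shown_per_second = images_per_second * speedup
    session_seconds = clip_seconds * speedup
    return shown_per_second, session_seconds


# Assumptions: ~1 generated image per second, 5x playback, a hypothetical 60 s clip.
shown, session = playback_stats(images_per_second=1.0, speedup=5.0, clip_seconds=60.0)
print(f"{shown:.0f} generated images per second of video")
print(f"a 60 s clip covers about {session:.0f} s of real session")
```

Under these assumptions, the viewer sees roughly five new interpretations per second of video, while each minute of footage stands for about five minutes of actual modeling.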